Hello! I am Kangning Liu, currently a Research Scientist at Adobe. I earned my Ph.D. at the NYU Center for Data Science, where I was advised by Prof. Carlos Fernandez Granda and Prof. Krzysztof J. Geras. Before that, I earned my M.Sc. in Data Science from ETH Zurich and my B.E. in Electrical Engineering from Tsinghua University.

Research Focus: My research centers on multimodal large language models, vision foundation models, and large-scale data/annotation systems—bridging cutting-edge research with real-world impact.

My current work focuses on fine-grained image understanding through vision-language modeling, enabling applications from generative AI data creation and reward modeling to AI agent tool calling and creative workflows. For example, the "selectionByPrompt" feature [Adobe Blog] enables users to select things in an image using natural language with Adobe Photoshop in ChatGPT. My previous research on segmentation foundation models has been deployed in the latest selection tools in Adobe Photoshop [Media Review], remove background in Adobe Firefly [Feature Summary], and segmentation enhancements in Adobe Lightroom [Feature Summary] and Adobe Express—enabling users to isolate complex image elements with remarkable speed and precision.

During my Ph.D., I contributed to a range of research projects focused on learning under imperfect supervision. These include uncertainty-aware fine-tuning of segmentation foundation models (SUM), noise-resilient deep segmentation (ADELE), weakly supervised segmentation (GLAM), and unsupervised/self-supervised learning (ItS2CLR). Beyond this, my expertise extends to video analysis (StrokeRehab) and video synthesis (UVIT & Controllable Face Video Synthesis).

For more details, feel free to contact me at kangning.liu[at]nyu.edu. You can also find me on Google Scholar and LinkedIn .

Research Experience

-

Adobe

San Jose, California, USA (April 2024 - present)

Research Scientist

- Generative AI & MLLMs: Enhance the grounding ability of multimodal large language models by integrating advanced reasoning capabilities and fine-grained understanding. These models enable a wide spectrum of applications, ranging from generative AI data creation and reward modeling for GenAI training to AI agent tool calling. For example, our "selectionByPrompt" feature [Adobe Blog] enables users to select things in an image using natural language with Adobe Photoshop in ChatGPT.

- Vision Foundation Model: Developed cutting-edge segmentation technologies to enhance Adobe products. Contributions include core features deployed in Adobe Photoshop’s newest selection tools [Media Review], remove background in Adobe Firefly [Feature Summary], and segmentation enhancements in Adobe Lightroom, Adobe Express.

-

Adobe

San Jose, California, USA (May 2023 - Nov 2023)

Research Intern | Advisors: Dr. Brian Price, Dr. Jason Kuen, Dr. Yifei Fan, Dr. Zijun Wei, Luis Figueroa, Markus Woodson

- Foundation Model Development & Scale: Worked on segmentation foundation model research, processing millions of images via a model-in-the-loop data engine, potentially influencing the roadmap for features in Adobe products such as Photoshop and Lightroom.

- SOTA Performance & Quality Assurance: Achieved SOTA segmentation accuracy across 20+ test sets (5-point IoU: 0.869, surpassing SAM's 0.828). This work (SUM) was published in NeurIPS 2024 [Project Website] and established scalable feedback loops for iterative model refinement and product integration.

-

Google

Mountain View, California, USA (May 2022 - Sep 2022)

Research Intern | Advisors: Dr. Xuhui Jia, Dr. Yu-Chuan Su, Dr. Ruijin Cang

- One-shot Face Video Synthesis: Developed deep learning algorithms optimized for real-time one-shot face video synthesis, targeting efficient deployment on mobile devices.

- Quality & Control: Innovated a method enhancing geometric correspondence (7% in AKD) and overall quality (15% in AED), while incorporating a plug-in interface for flexible emotion manipulation.

-

Center for Data Science, New York University

New York, USA (Sept 2019 - March 2024)

Research Assistant | Advisors: Prof. Carlos Fernandez-Granda, Prof. Krzysztof J. Geras

- Robust Deep Learning with Noisy Labels: Engineered robust algorithms tailored for segmentation tasks, showing resilience against annotation noise. This ensures the model's reliability, especially in real-world scenarios where annotation noise is prevalent. This work (ADELE) was selected for CVPR 2022 Oral Presentation [GitHub].

- Weakly Supervised Learning: Proposed an innovative framework for multiple instance learning, which iteratively improves instance-level features by jointly estimating latent instance-level pseudo labels, and show that it outperforms existing methods on three real-world medical datasets. This work (ItS2CLR) was published in CVPR 2023 [GitHub].

- Video Understanding: Investigated and designed novel deep learning models for video action classification and segmentation, applied for the motion primitives prediction. This work (StrokeRehab) was published in NeurIPS 2022 [Paper Link].

-

Computer Vision Laboratory, ETH Zurich

Zurich, Switzerland (Oct 2018 - Aug 2019)

Research Assistant | Advisors: Prof. Luc Van Gool, Prof. Radu Timofte, Prof. Shuhang Gu

- Domain Adaptation: Improved cross-domain semantic segmentation methods for urban scene images through GAN models, harnessing weak paired information.

- Video and Image Synthesis: Achieved state-of-the-art performance in unsupervised multimodal video translation through bidirectional recurrent neural networks.

Publications

See also Google Scholar.

-

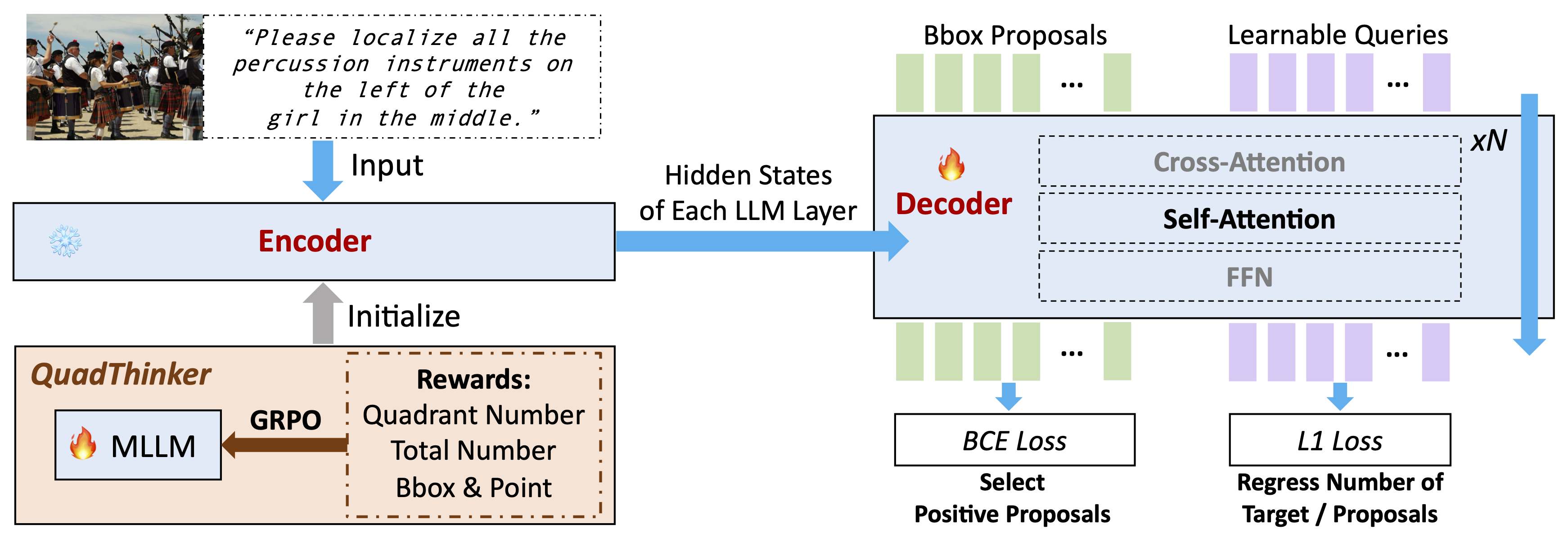

VGent: Visual Grounding via Modular Design for Disentangling Reasoning and Prediction

Weitai Kang, Jason Kuen, Mengwei Ren, Zijun Wei, Yan Yan, Kangning Liu*. (*project lead)

[Preprint]

Current visual grounding models either rely on auto-regressive MLLMs (slow with hallucination risks) or re-align LLMs with vision features (potentially undermining reasoning). In contrast, we propose VGent, a modular encoder-decoder architecture that explicitly disentangles high-level reasoning and low-level bounding box prediction. A frozen MLLM serves as the encoder for powerful reasoning, while a decoder selects target box(es) from detector-proposed candidates via cross-attention on encoder hidden states. This design leverages advances in both object detection and MLLMs, avoids auto-regressive pitfalls, and enables fast inference. We introduce: (i) QuadThinker, an RL-based training paradigm for multi-target reasoning; (ii) mask-aware labels for resolving detection-segmentation ambiguity; and (iii) global target recognition to improve target identification. VGent achieves state-of-the-art performance with +20.6% F1 improvement, +8.2% gIoU, and +5.8% cIoU gains under visual reference challenges, while maintaining constant, fast inference.

-

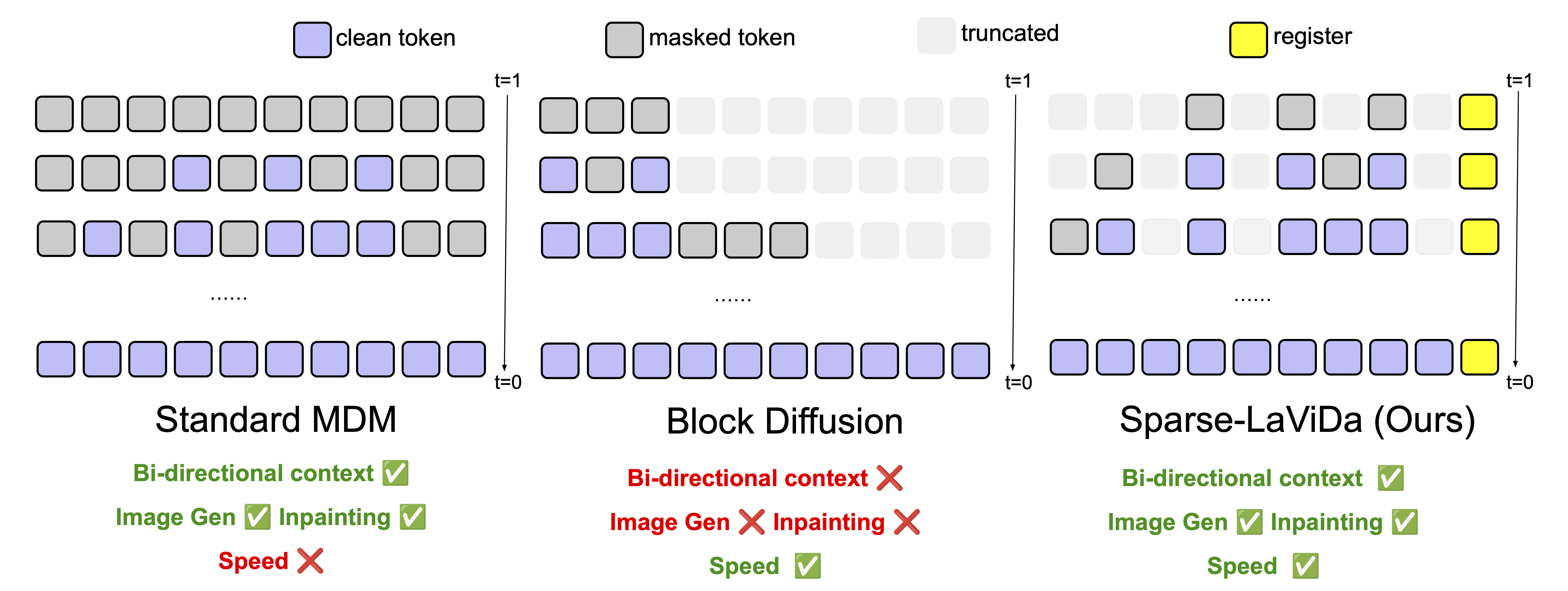

Sparse-LaViDa: Sparse Multimodal Discrete Diffusion Language Model

Shufan Li, Jiuxiang Gu, Kangning Liu, Zhe Lin, Zijun Wei, Aditya Grover, Jason Kuen.

[Preprint]

Masked Discrete Diffusion Models (MDMs) have achieved strong performance across a wide range of multimodal tasks, including image understanding, generation, and editing. However, their inference speed remains suboptimal due to the need to repeatedly process redundant masked tokens at every sampling step. In this work, we propose Sparse-LaViDa, a novel modeling framework that dynamically truncates unnecessary masked tokens at each inference step to accelerate MDM sampling. To preserve generation quality, we introduce specialized register tokens that serve as compact representations for the truncated tokens. Furthermore, to ensure consistency between training and inference, we design a specialized attention mask that faithfully matches the truncated sampling procedure during training.

-

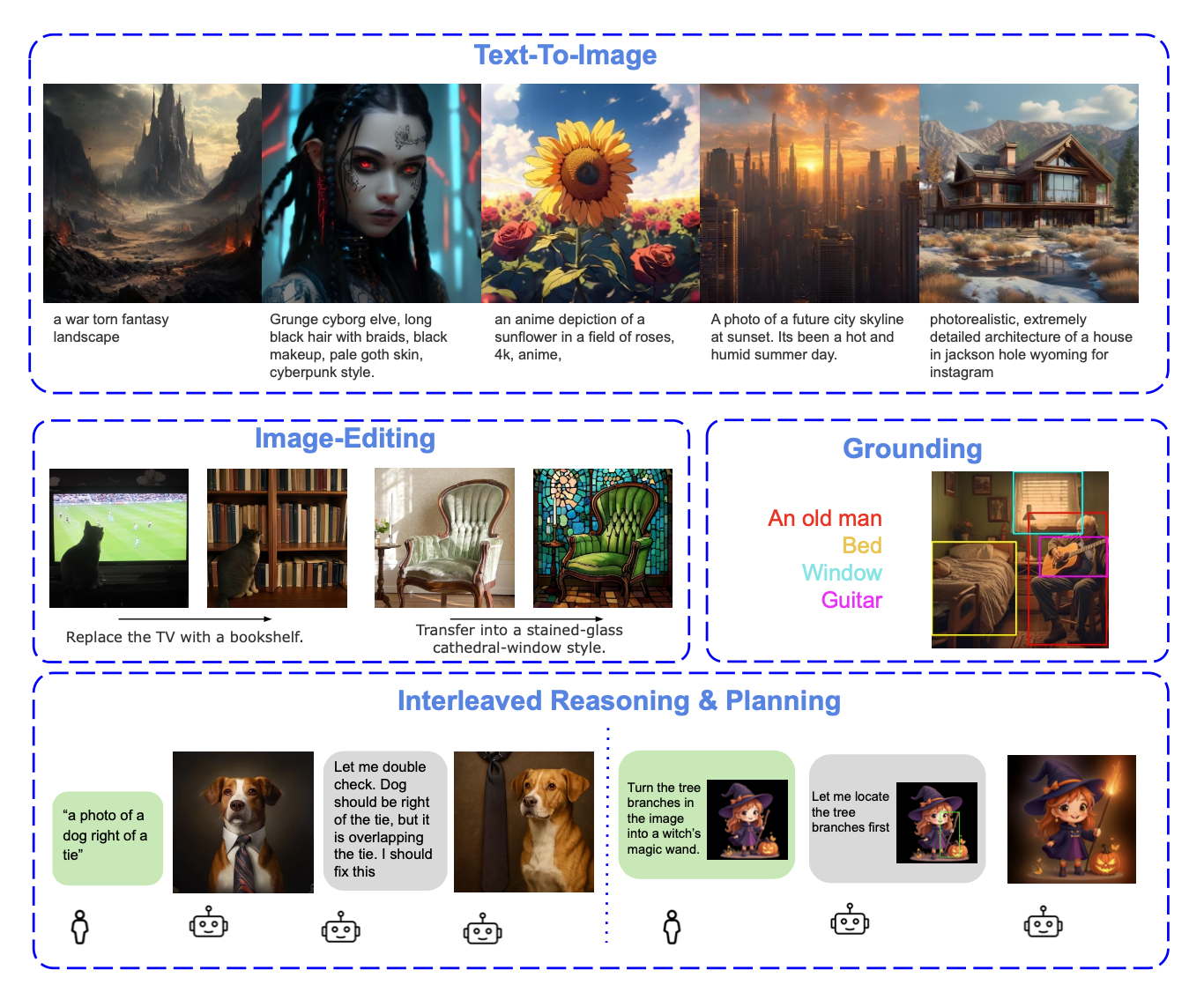

LaViDa-O: Elastic Large Masked Diffusion Models for Unified Multimodal Understanding and Generation

Shufan Li, Jiuxiang Gu, Kangning Liu, Zhe Lin, Zijun Wei, Aditya Grover, Jason Kuen.

[ICLR 2026]

We propose LaViDa-O, a unified Masked Diffusion Model (MDM) that unifies multimodal understanding and high-resolution generation (1024px) within a single framework. By incorporating a novel Elastic Mixture-of-Transformers (Elastic-MoT) architecture, LaViDa-O couples a lightweight generation branch with a robust understanding branch, enabling efficient token compression and stratified sampling. The model further leverages planning and iterative self-reflection to boost generation quality. LaViDa-O achieves state-of-the-art performance across benchmarks for object grounding (RefCOCO), text-to-image generation (GenEval), and image editing (ImgEdit), outperforming leading autoregressive and continuous diffusion models while offering considerable inference speedups.

-

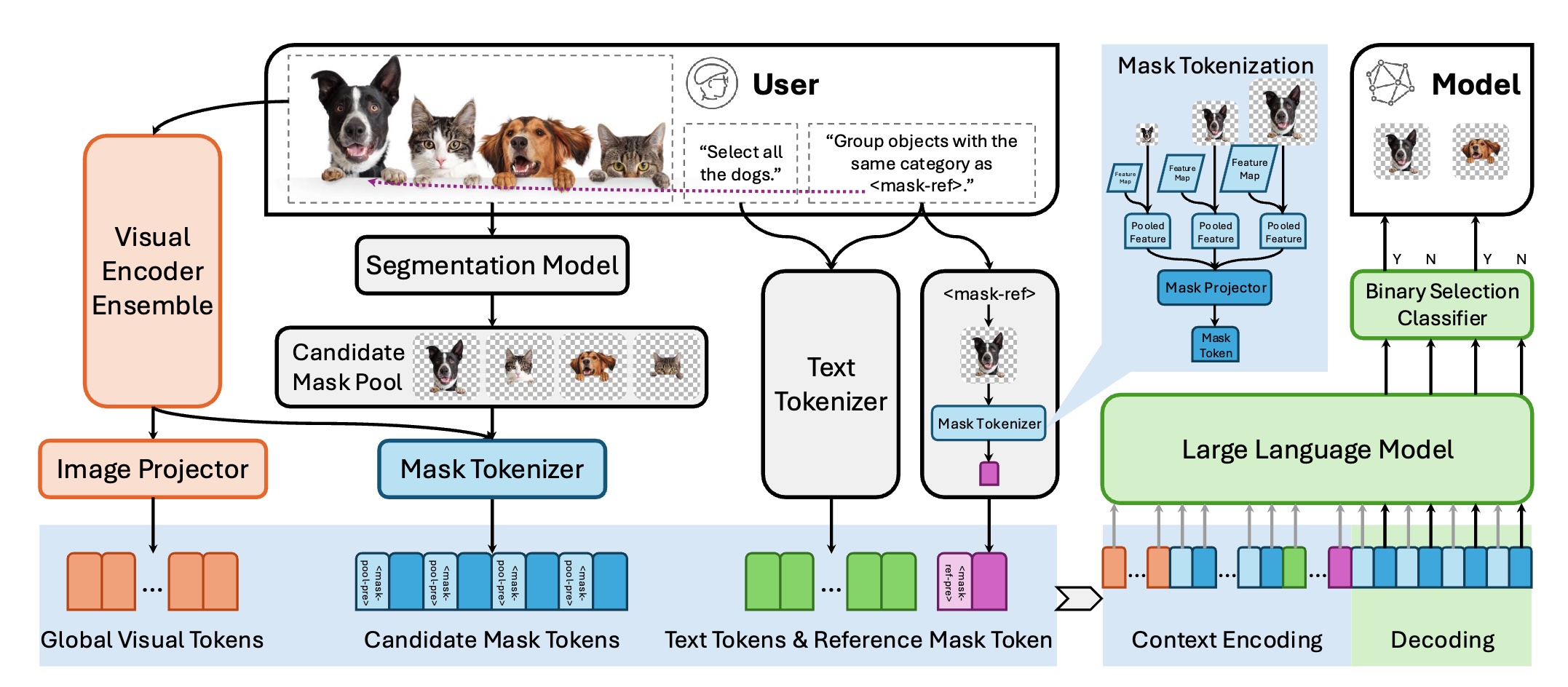

Refer to Any Segmentation Mask Group With Vision-Language Prompts

Shengcao Cao, Zijun Wei, Jason Kuen, Kangning Liu, Lingzhi Zhang, Jiuxiang Gu, HyunJoon Jung, Liang-Yan Gui, Yu-Xiong Wang

[ICCV 2025]

We introduce a novel task of omnimodal referring expression segmentation (ORES). In this task, a model produces a group of masks based on arbitrary prompts specified by text only or text plus reference visual entities. We propose a novel framework to "Refer to Any Segmentation Mask Group" (RAS), which augments segmentation models with complex multimodal interactions and comprehension via a mask-centric large multimodal model.

-

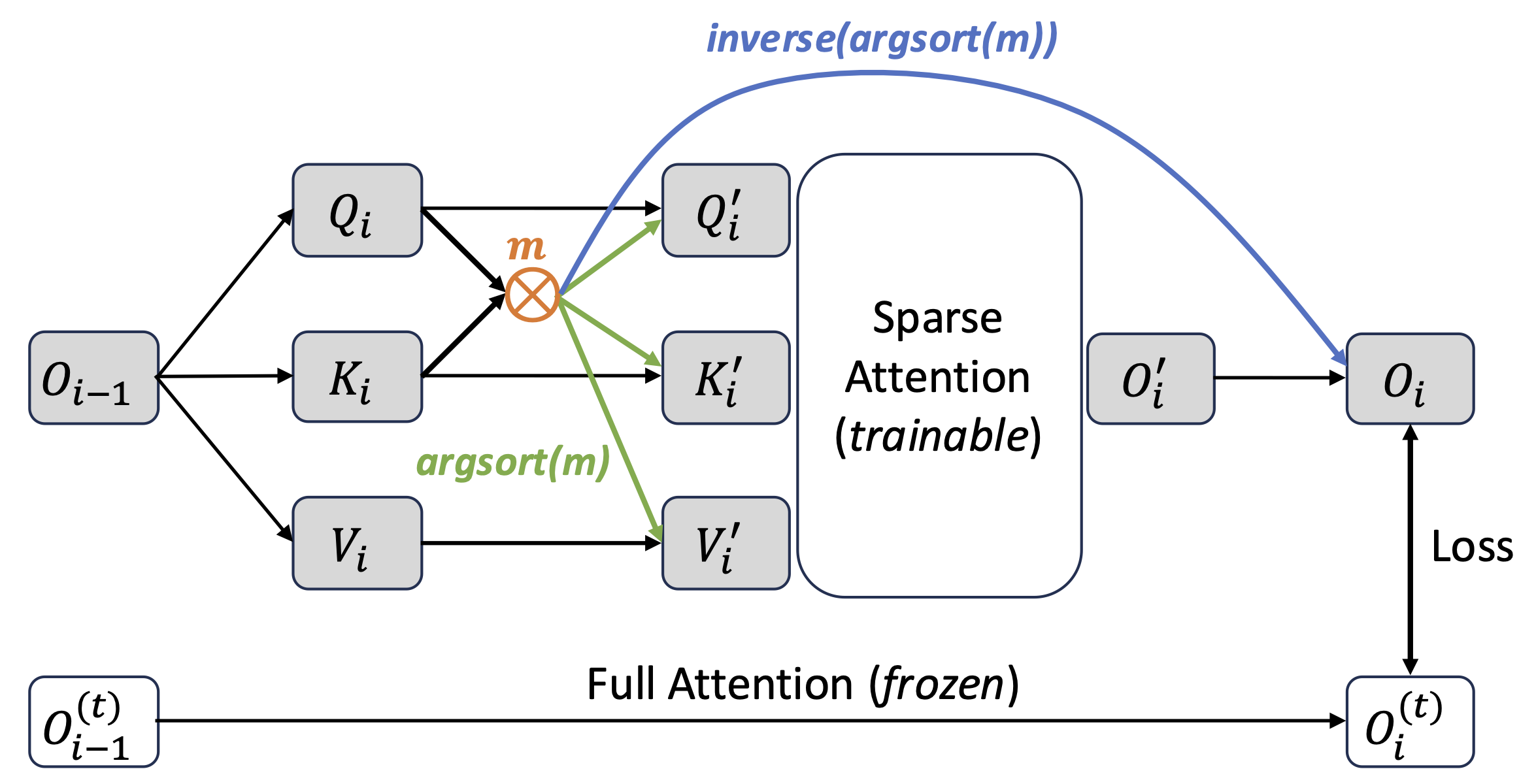

Leveraging Sparsity for Efficient Inference of High-Resolution Vision Foundation Models.

Xin Xu, Jason Kuen, Brian L. Price, Kangning Liu, Zijun Wei, Yu-Xiong Wang.

[BMVC 2025]

We introduce Sparse Vision Encoder (SVE), a post-training optimization framework that exploits naturally emerging sparsity in attention maps to accelerate inference of vision encoders. SVE selectively applies sparsity in key layers, performs sparsity distillation, and leverages cross-layer consistency to reuse sparsity structures. Experiments on DINOv2, CLIP, and SAM2 demonstrate up to 80% speedup for high-resolution encoding while preserving model performance

-

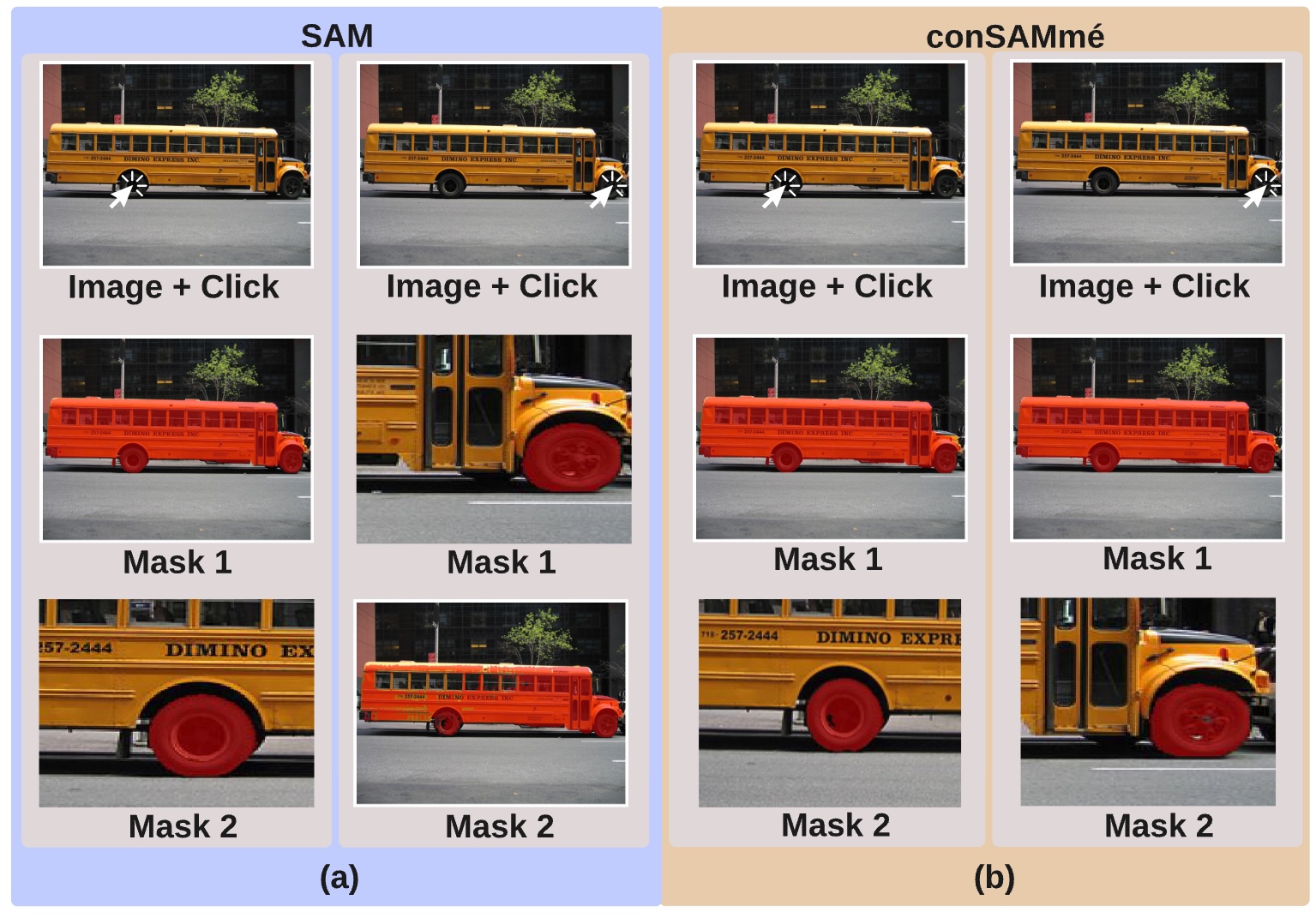

ConSAMmé: Achieving Consistent Segmentations with SAM

Josh Myers-Dean, Kangning Liu, Brian Price, Yifei Fan, Danna Gurari.

[CVPR 2025 NTIRE Workshop.]

Multi-output interactive segmentation methods generate multiple binary masks when given user guidance, such as clicks. However, it is unpredictable whether the order of the masks will match or whether those masks will be the same when given slightly different user guidance. To address these issues, we propose conSAMmé, a contrastive learning framework that conditions on explicit hierarchical semantics and leverages weakly supervised part segmentation data and a novel episodic click sampling strategy. Evaluation of conSAMmé’s performance, click robustness, and mask ordering show substantial improvements to baselines with less than 1% extra training data compared to the amount of data used for the baseline.

-

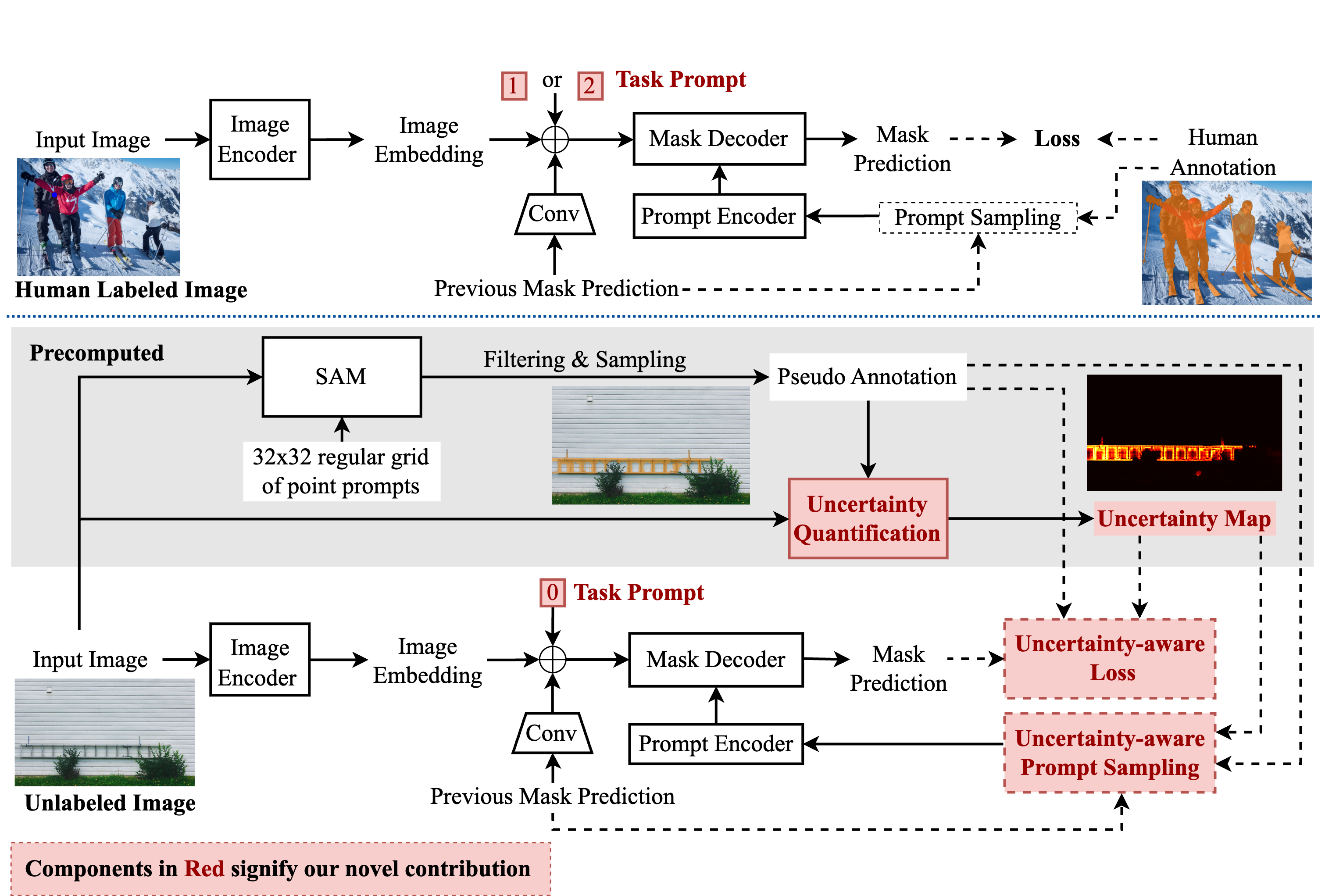

Uncertainty-aware Fine-tuning of Segmentation Foundation Models

Kangning Liu, Brian Price, Jason Kuen, Yifei Fan, Zijun Wei, Luis Figueroa, Krzysztof J. Geras, Carlos Fernandez-Granda.

[NeurIPS 2024]

The Segment Anything Model (SAM) is a large-scale foundation model that has revolutionized segmentation methodology. Despite its impressive generalization ability, the segmentation accuracy of SAM on images with intricate structures is unsatisfactory in many cases. We introduce the Segmentation with Uncertainty Model (SUM), which enhances the accuracy of segmentation foundation models by incorporating an uncertainty-aware training loss and prompt sampling based on the estimated uncertainty of pseudo-labels. We evaluated the proposed SUM on a diverse test set consisting of 22 public benchmarks, where it achieves state-of-the-art results. Notably, our method consistently surpasses SAM by 3-6 points in mean IoU and 4-7 in mean boundary IoU across point-prompt interactive segmentation rounds.

-

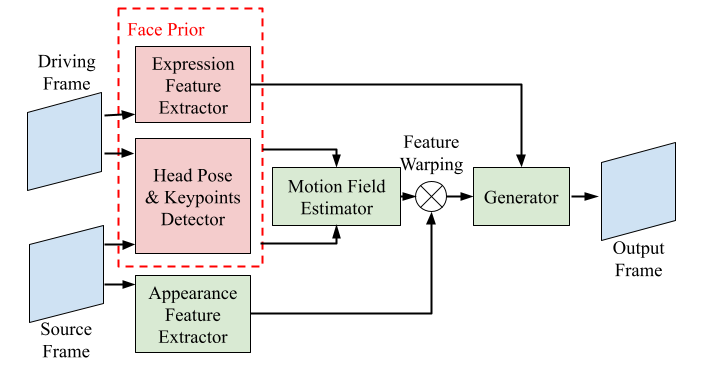

Controllable One-Shot Face Video Synthesis With Semantic Aware Prior

Kangning Liu, Yu-Chuan Su, Wei(Alex) Hong, Ruijin Cang, Xuhui Jia.

[Preprint]

We propose a method that leverages rich face prior information to generate face videos with improved semantic consistency and expression preservation. Our model improves the baseline by 7% in average keypoint distance and outperforms the baseline by 15% in average emotion embedding distance, while also providing a convenient interface for highly controllable generation in terms of pose and expression.

-

Multiple Instance Learning via Iterative Self-Paced Supervised Contrastive Learning

Kangning Liu*,Weicheng Zhu* (*Equal contribution), Yiqiu Shen, Sheng Liu, Narges Razavian, Krzysztof J. Geras, Carlos Fernandez-Granda.

[CVPR 2023]

In this paper, we introduce Iterative Self-Paced Supervised Contrastive Learning (Its2CLR), a novel method for learning high-quality instance-level representations in Multiple Instance Learning (MIL). Key features of our method: 1) self-paced learning to handle label noise and uncertainty; 2) supervised contrastive learning to learn discriminative instance-level embeddings; 3) iterative refinement of instance labels for robust and accurate classification.

-

Adaptive early-learning correction for segmentation from noisy annotations

Sheng Liu*, Kangning Liu* (*Equal contribution, order decided by coin flip.), Weicheng Zhu, Yiqiu Shen, Carlos Fernandez-Granda.

[CVPR 2022. Oral 4.2% acceptance rate]

Deep learning in the presence of noisy annotations has been studied extensively in classification, but much less in segmentation tasks. In this project, we study the learning dynamics of deep segmentation networks trained on inaccurately-annotated data and propose a new method for semantic segmentation ADaptive Early-Learning corrEction (ADELE).

-

StrokeRehab: A Benchmark Dataset for Sub-second Action Identification

Aakash Kaku*, Kangning Liu* (*Equal contribution), Avinash Parnandi*, Haresh Rengaraj Rajamohan, Anita Venkatesan, Audre Wirtanen, Natasha Pandit, Kannan Venkataramanan, Heidi Schambra, Carlos Fernandez-Granda.

[NeurIPS 2022]

We introduce a new benchmark dataset for the identification of subtle and short-duration actions. We also propose a novel seq2seq approach, which outperforms the existing methods on the new as well as standard benchmark datasets.

-

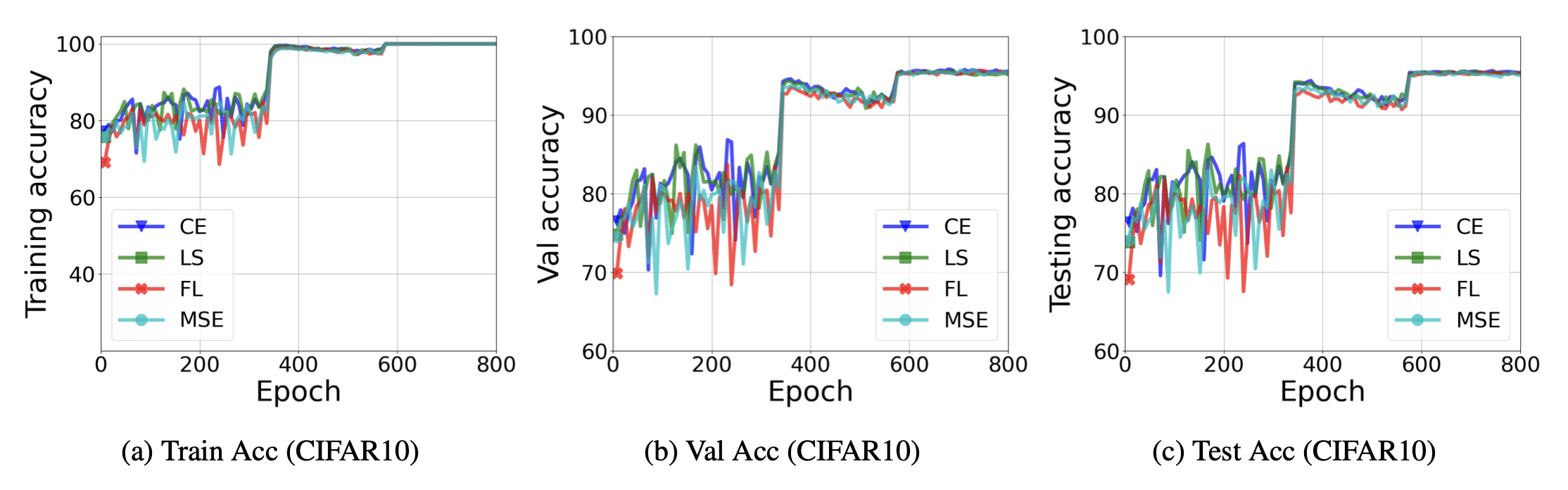

Are All Losses Created Equal: A Neural Collapse Perspective

Jinxin Zhou, Chong You, Xiao Li, Kangning Liu, Sheng Liu, Qing Qu, Zhihui Zhu.

[NeurIPS 2022]

A broad family of loss functions leads to neural collapse solutions hence are equivalent on training set; moreover, they exhibit largely identical performance on test data as well.

-

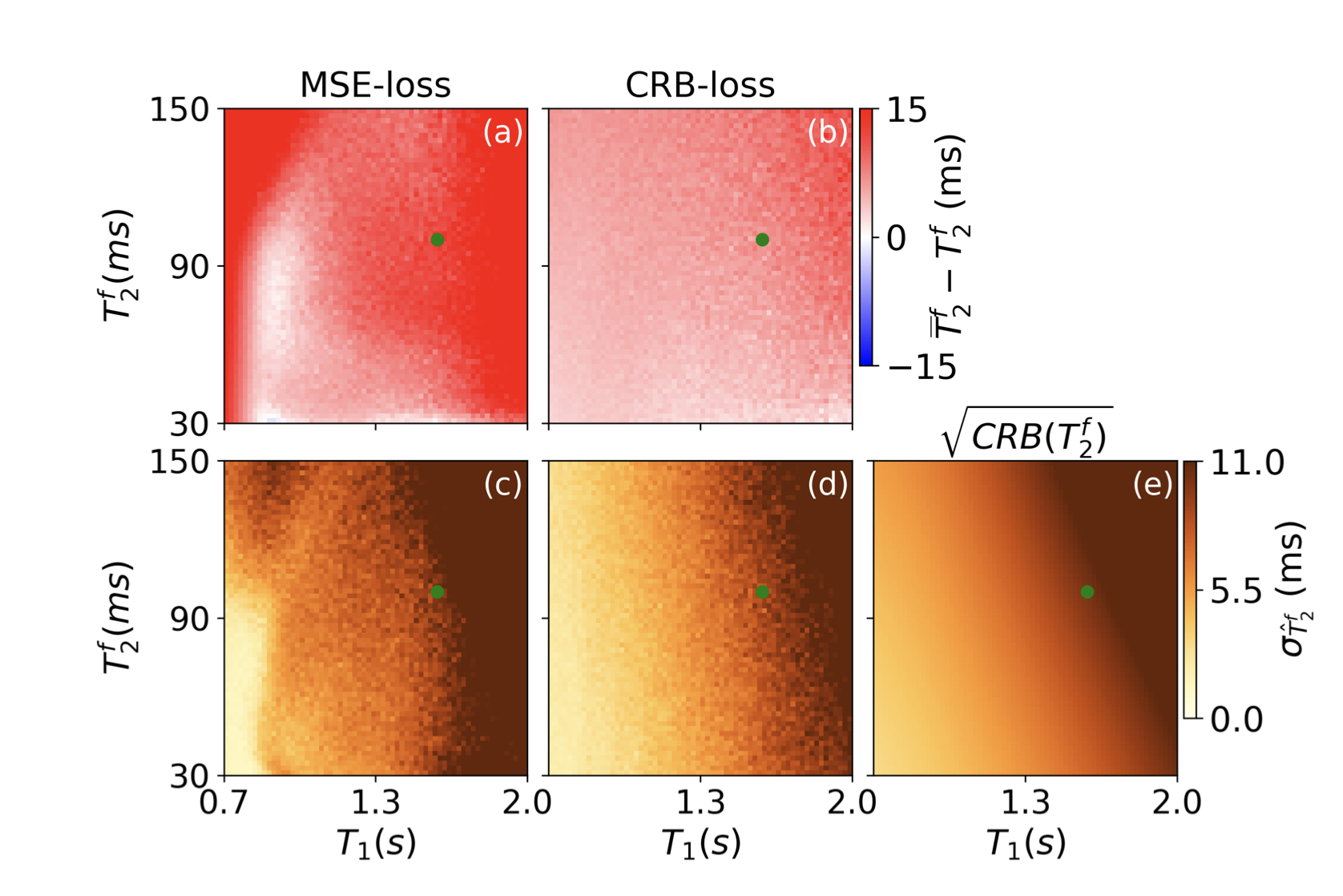

Cramér-Rao bound-informed training of neural networks for quantitative MRI

Xiaoxia Zhang*, Quentin Duchemin*, Kangning Liu* (*Equal contribution), Sebastian Flassbeck, Cem Gultekin, Carlos Fernandez-Granda, Jakob Asslander.

[Magnetic Resonance in Medicine 2021]

To address the parameter estimation problem in heterogeneous parameter spaces, we propose a theoretically well-founded loss function based on the Cramér-Rao bound (CRB), which provides a theoretical lower bound for the variance of an unbiased estimator.

-

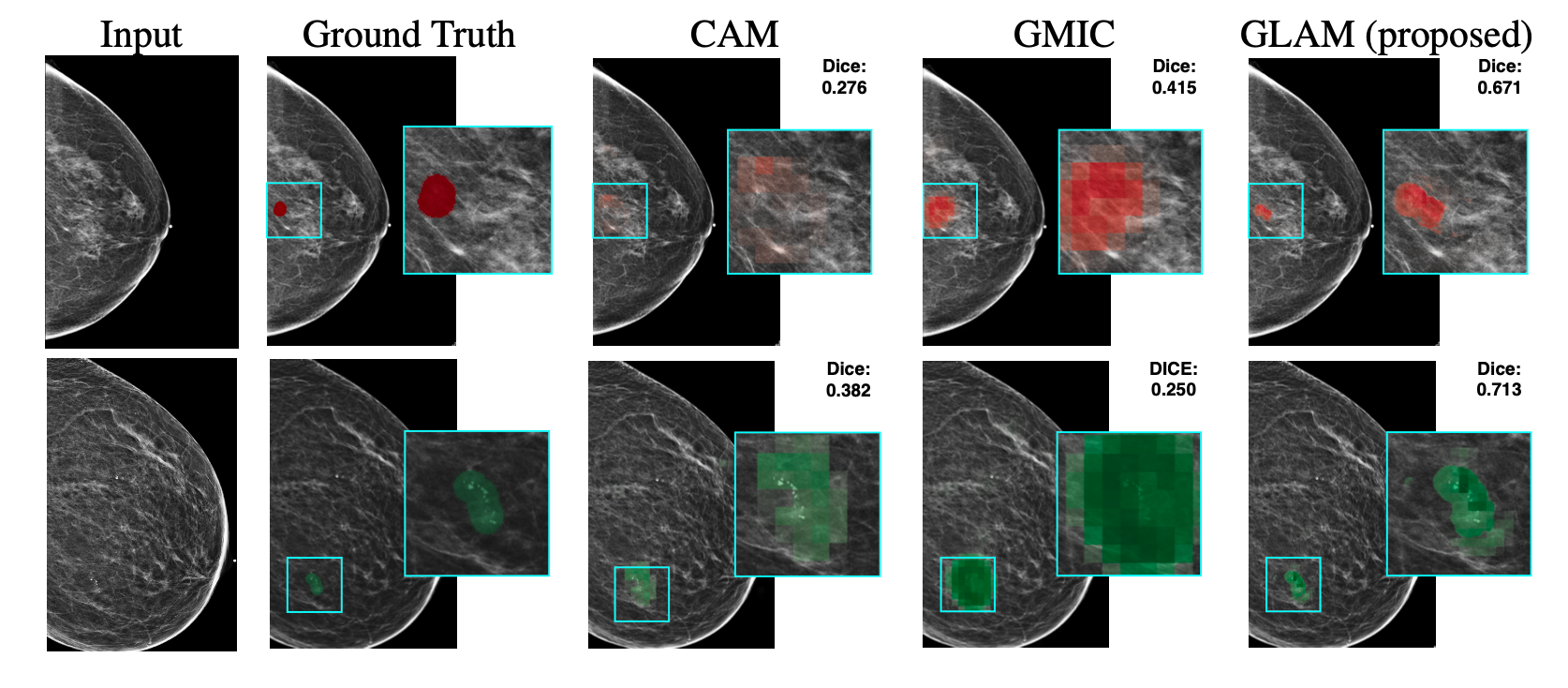

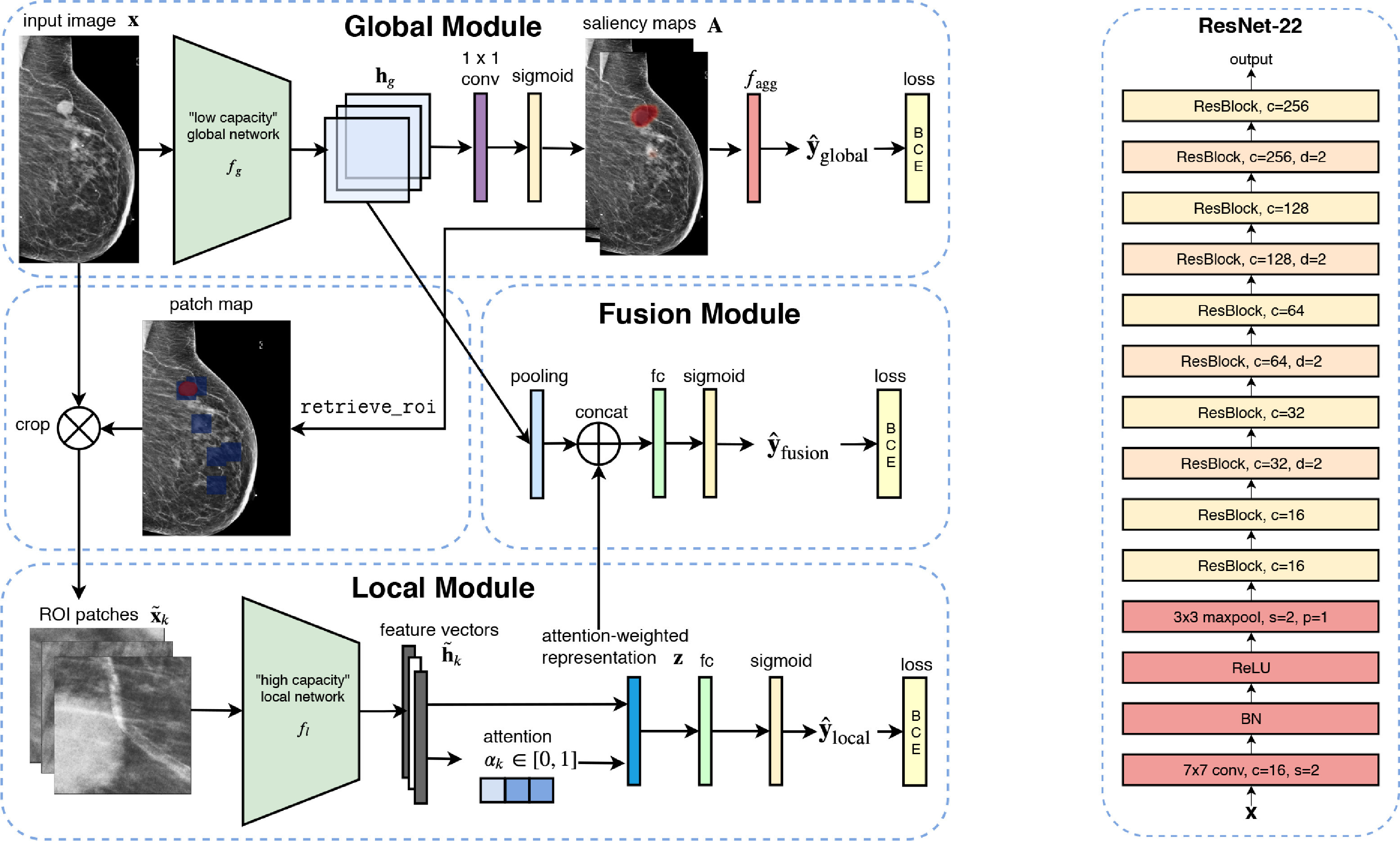

Weakly-supervised High-resolution Segmentation of Mammography Images for Breast Cancer Diagnosis

Kangning Liu, Yiqiu Shen, Nan Wu, Jakub Piotr Chdowski, Carlos Fernandez-Granda, and Krzysztof J. Geras

[Medical Imaging with Deep Learning 2021]

In this study, we unveil a new neural network model for weakly-supervised, high-resolution image segmentation. Focused on breast cancer diagnosis via mammography, our method first identifies regions of interest and then segments them in detail. Validated on a substantial, clinically-relevant dataset, our approach significantly outperforms existing methods, improving lesion localization performance by up to 39.6% and 20.0% for benign and malignant cases, respectively.

-

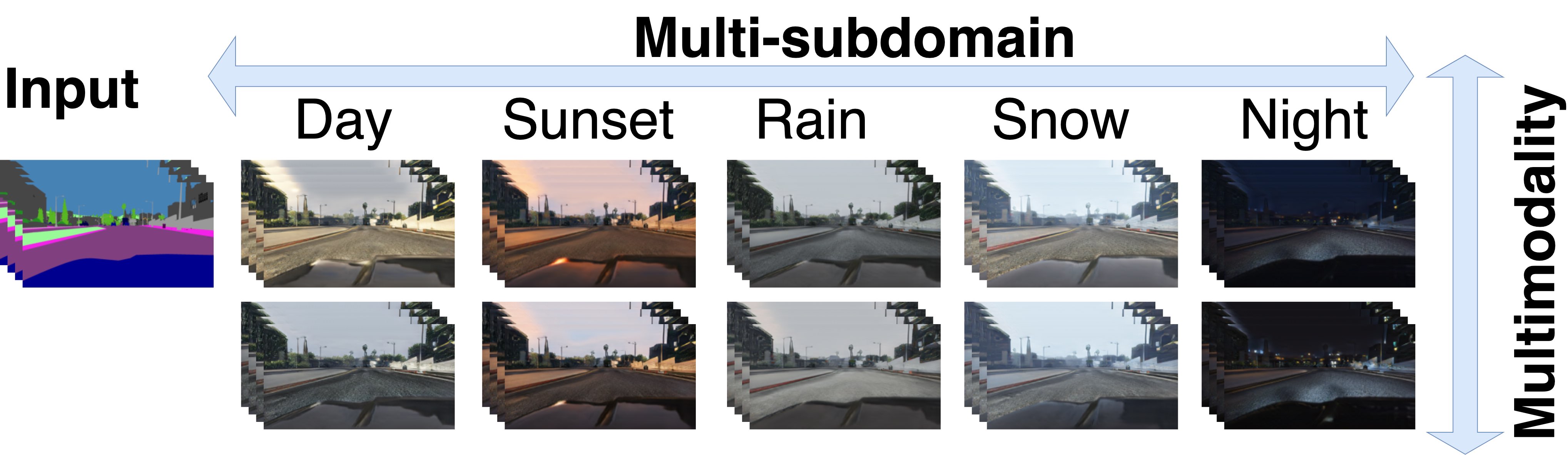

Unsupervised Multimodal Video-to-Video Translation via Self-Supervised Learning

Kangning Liu*, Shuhang Gu*(*Equal contribution), Andrs Romero, and Radu Timofte

[WACV 2021]

In this project, we introduce an unsupervised video-to-video translation model that decouples style and content. Leveraging specialized encoder-decoder and bidirectional RNNs, our model excels in propagating inter-frame details. This architecture enables style-consistent translations and offers a user-friendly interface for cross-modality conversion. We also implement a self-supervised training approach using a novel video interpolation loss that captures sequence-based temporal information.

-

An interpretable classifier for high-resolution breast cancer screening images

Shen, Yiqiu, Nan Wu, Jason Phang, Jungkyu Park, Kangning Liu, Sudarshini Tyagi, Laura Heacock et al.

[Medical image analysis, 2021]

Medical images differ from natural images in significantly higher resolutions and smaller regions of interest. Because of these differences, neural network architectures that work well for natural images might not be applicable to medical image analysis. In this work, we propose a novel neural network model to address these unique properties of medical images.

Teaching

-

Probability and Statistics for Data Science, NYU, Center for Data Science

Teaching Assistant (Sept - Dec 2021)

-

Advanced Machine Learning, ETH Zurich, Department of Computer Science

Teaching Assistant (Sept - Dec 2018)

Service

-

Award

Top Reviewer for NeurIPS 2024

-

Conference Reviewer for

CVPR, ICCV, ECCV, NeurIPS, ICLR, ICML, AISTATS, AAAI, WACV

-

Journal Reviewer for

TPAMI,TMLR, TNNLS, CVIU

Miscellaneous

-

Capturing moments and exploring light with my Sony A7M4.

Pageviews